Powering innovation with HPC

Access to academic facilities like the Emerald supercomputer is vital to the success of new startups in the High Performance Computing field, says Jamil Appa, co-founder of Simulation on Demand startup Zenotech.

Appa left a successful career at BAE Systems two years ago to set up a new business with co-founder David Standingford, bringing together over 30 years of computational engineering experience. After years of creating software for internal customers, Appa and Standingford could see much that would be of use to engineers in other businesses – and could also see the barriers to entry that those businesses faced with the products then available to them.

“The tools that are available in the commercial space have high licensing costs – you have to buy a ‘seat’, even if you would just use a product for a couple of weeks a year – and they are perceived as very complicated to use. There are lots of engineers who would benefit from using aerospace grade tools with a flexible licensing policy, so that was the aim of the business we set up,” Appa says.

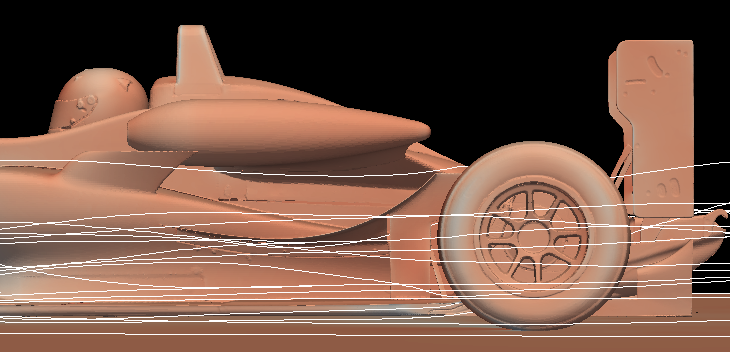

Zenotech has developed an easy-to-use, GPU-accelerated, high-performance computational fluid dynamics solver, zCFD, offering unlimited resources on an on-demand payment model.

Developing and testing this sort of software takes serious resources – it needs both access to an array of GPUs and true scalability.

“It’s been invaluable. When we started, there was no other resource in the UK that could provide GPUs at that sort of scale. Plus, if I had had to pay full commercial rates to test at scale, I’d be investing a huge amount in development. That’s one of the major barriers to the whole area of HPC software development – the infrastructure needed. And not just for testing but just for proving things. It might be the case that a particular algorithm just doesn’t work at a given scale, or that a problem just can’t be solved. And the cost of finding that out, for an SME like ours – we were just two people until we recently hired our first employee – well, it would just be an impossibility,” he says.

The testing on Emerald wasn’t always straightforward, and sometimes involved working directly with hardware manufacturers, including Nvidia, to iron out the glitches.

“I have a good relationship with them and could work directly with the technical team, which made things easier. But I always made sure the Emerald sys admins were aware of what was happening, so that they could implement changes and learn from each hiccup that we solved,” Appa says.

In the long term, Appa hopes to run his own zCFD software for clients, and also to offer on-demand access to HPC cloud resources for clients who need a fast, scalable system.

“I want to offer a choice. If clients want a cheap as chips option from AWS, we can offer that. But others will want access to GPUs at scale, or simply need more hand holding, in which case that might be Emerald. We can offer full support – and the whole spectrum in between. Some people will want more reliability, and others will just want a quick look at something at scale.”

Appa hopes to work with a range of vendors, each of them “carving out their own special offerings in that space, and Emerald is very much part of that. For clients wanting to use GPUs, we need that resource. You can get access to GPUs in Amazon, yes, but those systems aren’t architected to use this sort of large software at scale.”

“We wouldn’t be where we are today without having access to facilities like Emerald, both for product development, and for the service we want to offer our clients,” he says.

The authors would like to acknowledge that the work presented here made use of the Emerald High Performance Computing facility provided via the Science and Engineering South Consortium in partnership with STFC Rutherford Appleton Laboratory.